Bidirectional, Native Communication with Kafka

This Kafka Native Add-on, developed using Kafka’s native API, directly integrates MigratoryData with Apache Kafka, eliminating the need for an intermediary layer like Kafka Connect.

System model

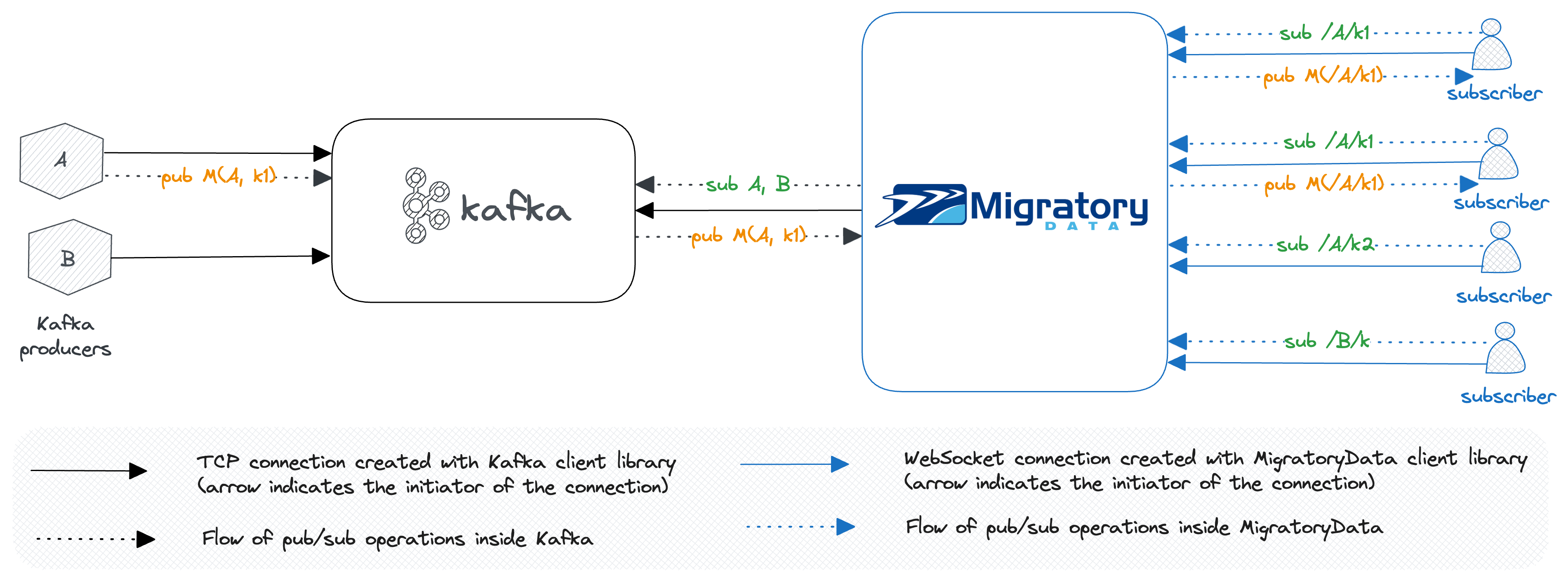

MigratoryData Kafka Edition (KE) consists of MigratoryData with this Kafka native add-on enabled. The diagrams below show the interactions in a MigratoryData KE deployment.

Subscribe Model

MigratoryData KE establishes a TCP connection to Kafka using Kafka’s client library and can be configured to subscribe to a list of Kafka topics.

An app built with one of MigratoryData’s client libraries establishes a WebSocket connection to MigratoryData KE and can subscribe to one or more MigratoryData subjects.

If MigratoryData KE is configured to subscribe to Kafka topic T, any message M received by Kafka from a producer on topic T with the key k is delivered to MigratoryData KE. Subsequently, MigratoryData KE delivers this message M to all application users who have subscribed to the MigratoryData subject /T/k.

For example, in the diagram above, MigratoryData KE is configured to subscribe to Kafka topics A and B. When Kafka gets a message M with the topic A and the key k1 from a Kafka producer, it is consumed by MigratoryData KE (because it is subscribed to the topic A). Subsequently, MigratoryData KE delivers the message M to all subscribers of the subject /A/k1.

For more details on the Kafka topic to MigratoryData subject mapping, see the section Dynamic Mapping between MigratoryData Subjects and Kafka Topics below.
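The subscribe-side convention can be sketched in a few lines of Python. This is only an illustration of the /T/k rule described above, not the add-on's actual code; the function name `kafka_to_subject` is hypothetical, and mapping a record without a key to the single-segment subject /T is an assumption inferred from the mapping convention.

```python
from typing import Optional


def kafka_to_subject(topic: str, key: Optional[str]) -> str:
    """Build the MigratoryData subject for a Kafka record's topic and key."""
    if key is None:
        # Assumption: a record without a key maps to the single-segment subject /T.
        return f"/{topic}"
    # A record on topic T with key k maps to the subject /T/k.
    return f"/{topic}/{key}"


# The example from the diagram: topic A, key k1.
print(kafka_to_subject("A", "k1"))
```

Subscribers of the resulting subject are the ones that receive the message consumed from Kafka.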

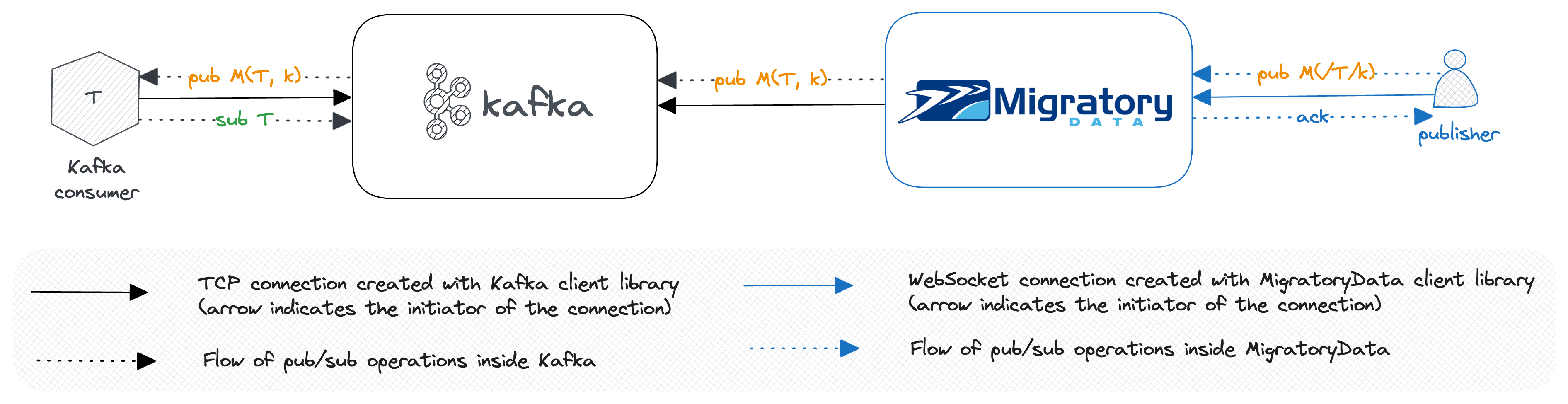

Publish Model

MigratoryData KE establishes a TCP connection to Kafka by utilizing Kafka’s client library.

An app built with one of MigratoryData’s client libraries establishes a WebSocket connection to MigratoryData KE and can publish real-time messages on one or more MigratoryData subjects.

When MigratoryData KE gets a message M with a subject /T/k from a publisher, it delivers that message M to Kafka on the topic T with the key k.

For more details on the MigratoryData subject to Kafka topic mapping, see the section Dynamic Mapping between MigratoryData Subjects and Kafka Topics below.

Separately Licensed

This Kafka Native Add-on is separately licensed. You may use it for development and testing purposes using the default evaluation license key which is:

LicenseKey = zczuvikp41d2jb2o7j6n

To activate the Kafka Native Add-on for production use, a license key should be obtained from MigratoryData.

Stateless Active/Active Clustering

MigratoryData with Kafka Native Add-on enabled can be deployed as a stateless cluster of multiple independent nodes where Kafka plays the role of communication engine between the nodes. Not sharing any user state across the cluster, MigratoryData with Kafka Native Add-on enabled scales horizontally in a linear fashion both in terms of subscribers and publishers.

Also, the stateless nature of the MigratoryData cluster when using the Kafka Native Add-on greatly simplifies cluster management in the cloud, because the cluster can rely on the elasticity features of cloud technologies like Kubernetes.

Dynamic Mapping between MigratoryData Subjects and Kafka Topics

Thanks to the compatibility between MigratoryData and Kafka, the mapping between MigratoryData subjects and Kafka topics is automatic, following a simple convention. This eliminates the need to define the mapping in config files.

A MigratoryData subject is a string of UTF-8 characters that follows a syntax similar to Unix absolute paths. It consists of an initial slash (/) character followed by one or more strings of characters, called segments, separated by the slash (/) character. Within a segment, the slash (/) character is reserved. For example, the string /Stocks/NYSE/IBM, composed of the segments Stocks, NYSE, and IBM, is a valid MigratoryData subject.

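The first segment of a MigratoryData subject is mapped to the Kafka topic, and the remaining segments, if any, are mapped to the key. Consequently, the first segment must follow the Kafka topic syntax, that is, it may only contain characters from [a-zA-Z0-9._-]. The remaining segments can use any UTF-8 characters because there is no syntax restriction for keys in Kafka. If a MigratoryData subject consists of a single segment, then the key of the Kafka topic given by the first segment is null.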

Here are some examples of mappings between MigratoryData subjects and Kafka topics:

| MigratoryData Subject | Kafka Topic and Key |
|---|---|
| /vehicles/1 | The Kafka topic is vehicles and the key of the topic is 1 |
| /vehicles/1/speed | The Kafka topic is vehicles and the key of the topic is 1/speed |
| /vehicles | The Kafka topic is vehicles and the key is null |
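The examples in the table can be reproduced with a short Python sketch. It is a minimal illustration of the convention, assuming the first segment is the topic and everything after it is the key; the function name `subject_to_kafka` is hypothetical and this is not the add-on's implementation.

```python
import re
from typing import Optional, Tuple

# Characters allowed in the first segment, as listed in the text above.
TOPIC_PATTERN = re.compile(r"[a-zA-Z0-9._-]+")


def subject_to_kafka(subject: str) -> Tuple[str, Optional[str]]:
    """Split a MigratoryData subject into a Kafka topic and an optional key."""
    if not subject.startswith("/") or len(subject) < 2:
        raise ValueError(f"not a valid subject: {subject!r}")
    # The first segment is the topic; the remaining segments form the key.
    topic, separator, key = subject[1:].partition("/")
    if not TOPIC_PATTERN.fullmatch(topic):
        raise ValueError(f"first segment is not a valid Kafka topic: {topic!r}")
    # A single-segment subject has no key, so the key is null (None here).
    return topic, (key if separator else None)


for subject in ("/vehicles/1", "/vehicles/1/speed", "/vehicles"):
    print(subject, "->", subject_to_kafka(subject))
```

Validating the first segment up front makes an invalid subject fail in the sketch itself rather than later when a topic is looked up in Kafka.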

Enabling the add-on

The Kafka Native Add-on is preinstalled with your MigratoryData server. To enable it, edit the main configuration file of the MigratoryData server migratorydata.conf and configure the parameter ClusterEngine as follows:

ClusterEngine = kafka

Configuration

This section provides information on how to configure the Kafka Native Add-on.

With Config Files

MigratoryData includes the following configuration files for the Kafka Native Add-on. These files can be found in the /etc/migratorydata/ folder when using the deb/rpm installers or in the root folder with the tarball installer.

| Configuration File Name | Description |
|---|---|
| addons/kafka/consumer.properties | Config file for built-in Kafka consumers |
| addons/kafka/producer.properties | Config file of built-in Kafka producers |

These two config files have comments and optional parameters besides required parameters. The optional parameters have default values. An optional parameter that is not present in the configuration file will be used with its default value.

The Kafka Native Add-on implements a Kafka consumer group and a Kafka producer group. Each of them accepts two types of parameters:

- Kafka-defined parameters

- MigratoryData-specific parameters

Kafka Consumers

The parameters of this section are defined in the config file for built-in Kafka consumers, i.e. addons/kafka/consumer.properties.

Kafka-defined Parameters

You can use any parameter provided by Kafka’s API for consumers. Please consult the Kafka documentation for details on each of these parameters. Notably, the following Kafka-defined parameters are important for MigratoryData:

| Parameter | Description |
|---|---|
| bootstrap.servers | A comma-separated list of Kafka node addresses where MigratoryData will connect for Kafka cluster discovery |
| group.id | The name of the built-in Kafka consumers group |

MigratoryData-specific Parameters

The following MigratoryData-specific parameters for Kafka consumers are available. Note that the parameters topics and topics.regex are mutually exclusive: specify one or the other, not both.

| topics | |
|---|---|
| Description | A comma-separated list of Kafka topics to consume |
| Default value | none |
| Required parameter | Required |

See also the parameter topics.regex below.

| topics.regex | |
|---|---|
| Description | A Java-like regular expression giving topics to consume |
| Default value | none |
| Required parameter | Required - note that only the parameter topics or topics.regex should be specified. |

See also the parameter topics above.
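The either/or rule for topics and topics.regex can be sketched as follows. This is a hedged illustration only: the function `select_topics` is hypothetical, Python's `re` module stands in for Java regular expressions, and requiring the pattern to match the whole topic name is an assumption.

```python
import re
from typing import Dict, Iterable, List


def select_topics(config: Dict[str, str], available: Iterable[str]) -> List[str]:
    """Return the topics to consume from either 'topics' or 'topics.regex'."""
    has_list = bool(config.get("topics"))
    has_regex = bool(config.get("topics.regex"))
    # The two parameters are mutually exclusive and one of them is required.
    if has_list == has_regex:
        raise ValueError("specify exactly one of 'topics' or 'topics.regex'")
    if has_list:
        # 'topics' is a comma-separated list of topic names.
        return [t.strip() for t in config["topics"].split(",") if t.strip()]
    # Assumption: the regular expression must match the entire topic name.
    pattern = re.compile(config["topics.regex"])
    return sorted(t for t in available if pattern.fullmatch(t))


existing = ["vehicles", "orders", "vehicle-events"]
print(select_topics({"topics": "vehicles, orders"}, existing))
print(select_topics({"topics.regex": "veh.*"}, existing))
```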

| consumers.size | |
|---|---|
| Description | Specify the number of consumers in the built-in Kafka consumers group |
| Default value | 1 |
| Required parameter | Optional |

In order to increase the message consumption capacity, multiple Kafka consumers can be configured using this parameter.

All consumers belong to the Kafka consumer group defined by the Kafka parameter group.id.

| recovery.on.start | |
|---|---|
| Description | Specify whether or not to recover historical messages at start |
| Default value | no |
| Required parameter | Optional |

If this parameter is set to yes, then at start time MigratoryData will try to recover from Kafka all messages for the Kafka topics defined by the parameter topics (or topics.regex) that occurred in the last number of seconds defined by the main MigratoryData parameter CacheExpireTime, which defaults to 180 seconds.

If this parameter is not defined, or if it is set to no, then at start time MigratoryData will not get any historical messages from Kafka, but will start from the latest offsets found in Kafka for the topics defined by the parameter topics (or topics.regex).
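To illustrate how optional consumer parameters fall back to their documented defaults, here is a minimal Python sketch. The parser is deliberately simplified (it only handles `name = value` lines and comments, not the full Java properties syntax), the function name is hypothetical, and the group.id value in the sample is made up for the example.

```python
from typing import Dict

# Defaults documented above for the optional MigratoryData-specific parameters.
DEFAULTS = {"consumers.size": "1", "recovery.on.start": "no"}


def load_consumer_properties(text: str) -> Dict[str, str]:
    """Parse simple 'name = value' lines and fill in missing optional parameters."""
    props = dict(DEFAULTS)
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith(("#", "!")):
            continue
        name, _, value = line.partition("=")
        props[name.strip()] = value.strip()
    return props


# Hypothetical consumer.properties content; the group.id value is an assumption.
SAMPLE = """\
# Built-in Kafka consumers
bootstrap.servers = kafka.example.com:9092
group.id = migratorydata
topics = vehicles
"""

settings = load_consumer_properties(SAMPLE)
print(settings["consumers.size"], settings["recovery.on.start"])
```

Because neither consumers.size nor recovery.on.start appears in the sample, both keep their default values in the resulting settings.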

Kafka Producers

The parameters of this section are defined in the config file for built-in Kafka producers, i.e. addons/kafka/producer.properties.

Kafka-defined Parameters

You can use any parameter provided by Kafka’s API for producers. Please consult the Kafka documentation for details on each of these parameters. Notably, the following Kafka-defined parameters are important for MigratoryData:

| Parameter | Description |
|---|---|
| bootstrap.servers | A list of Kafka node addresses where MigratoryData will connect for Kafka cluster discovery |
| partitioner.class | Partitioner class to distribute messages across topic partitions |

Kafka messages without a key (i.e. with the key null) are delivered by default to the clients of the MigratoryData server unordered, i.e. using standard delivery. To send all Kafka messages, with or without a key, in order and with guaranteed delivery, set the parameter partitioner.class to com.migratorydata.kafka.agent.KeyPartitioner. In this way, messages without a key are always written by the producers to partition 0, and therefore their order is preserved.
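The effect of always writing keyless messages to partition 0 can be pictured with the Python sketch below. Only the keyless branch reflects the behavior described above; the keyed branch, the CRC32 hash, and the function name `choose_partition` are assumptions made for illustration and are not the implementation of com.migratorydata.kafka.agent.KeyPartitioner.

```python
import zlib
from typing import Optional


def choose_partition(key: Optional[bytes], num_partitions: int) -> int:
    """Pick a partition so that messages sharing a key, or lacking one, stay ordered."""
    if num_partitions < 1:
        raise ValueError("a topic has at least one partition")
    if key is None:
        # Messages without a key always go to partition 0, which preserves their order.
        return 0
    # Assumption: keyed messages are spread by a stable hash of the key.
    # CRC32 is a stand-in here, not the hash used by Kafka or by MigratoryData.
    return zlib.crc32(key) % num_partitions


print(choose_partition(None, 6))
print(choose_partition(b"k1", 6) == choose_partition(b"k1", 6))
```

Since Kafka only guarantees ordering within a partition, sending every keyless message to the same partition is what makes in-order delivery possible for them.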

MigratoryData-specific Parameters

The following MigratoryData-specific parameters for Kafka producers are available.

| producers.size | |
|---|---|
| Description | Specify the number of producers in the built-in Kafka producers group |
| Default value | 1 |
| Required parameter | Optional |

In order to increase the message production capacity, multiple Kafka producers can be configured using this parameter.

With System Variables

You can use the environment variable MIGRATORYDATA_KAFKA_EXTRA_OPTS to customize various aspects of your Kafka Native Add-on. It should be defined in one of the following configuration files:

| System configuration file | Platform |
|---|---|
| /etc/default/migratorydata | For deb-based Linux (Debian, Ubuntu) |
| /etc/sysconfig/migratorydata | For rpm-based Linux (RHEL, CentOS) |

| MIGRATORYDATA_KAFKA_EXTRA_OPTS | |
|---|---|
| Description | Specifies various options for Kafka consumer and producer |
| Default value | "" |
| Required parameter | Optional |

Use this environment variable to define the Kafka consumer and producer options or to override the value of one or more of these options. Each option defined with this environment variable must have the following syntax:

-Dparameter=value

where the value of the parameter should be defined without spaces and quotes.

For example, to configure (or override) the values of the parameters bootstrap.servers and topics of the built-in Kafka consumers with the values kafka.example.com:9092 and vehicles, respectively, use:

MIGRATORYDATA_KAFKA_EXTRA_OPTS = \
'-Dbootstrap.servers=kafka.example.com:9092 -Dtopics=vehicles'
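The -Dparameter=value syntax can be checked with a small Python sketch. It only illustrates the expected shape of the option string; the function name `parse_extra_opts` is hypothetical, and how the server itself reads the variable is not described here.

```python
import shlex
from typing import Dict


def parse_extra_opts(opts: str) -> Dict[str, str]:
    """Turn a string of -Dparameter=value options into a parameter dictionary."""
    parsed: Dict[str, str] = {}
    # Options are separated by whitespace; values contain no spaces or quotes.
    for token in shlex.split(opts):
        if not token.startswith("-D") or "=" not in token:
            raise ValueError(f"expected -Dparameter=value, got {token!r}")
        name, _, value = token[2:].partition("=")
        parsed[name] = value
    return parsed


opts = "-Dbootstrap.servers=kafka.example.com:9092 -Dtopics=vehicles"
print(parse_extra_opts(opts))
```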

Deployment Options

Please refer to the MigratoryData deployment documentation for guidance on deploying a standalone instance of MigratoryData server integrated with Apache Kafka.

Please refer to the same documentation to learn how to deploy a cluster of MigratoryData servers integrated with Apache Kafka, or its equivalent cloud services Amazon MSK or Azure Event Hubs.