MigratoryData just released its integration with Apache Kafka. This is a significant step forward for both Kafka users and MigratoryData users. On the one hand, MigratoryData gives Kafka a scalable extension of Kafka data pipelines across the Web while preserving key features such as real-time messaging, high availability, guaranteed delivery, and message ordering. On the other hand, Kafka gives MigratoryData a reliable layer for message storage and integration with Kafka data pipelines such as stream processing, databases, and microservices.

This blog post is neither about why MigratoryData is best suited for extending Kafka messaging to Web and Mobile apps, nor about how to use the integration between MigratoryData and Kafka. For these topics, please refer to the solution page and the documentation.

In this blog post, we instead outline the compatibility between MigratoryData and Apache Kafka from an architectural point of view.

Overview

Both Kafka and MigratoryData are sophisticated but easy-to-use distributed publish/subscribe messaging systems which implement:

- clustering

- guaranteed delivery

- high availability (with no single point of failure)

- message ordering

However, they are complementary in other respects. For example:

- Kafka is designed for connecting a relatively small number of backend systems across a trusted network, while MigratoryData is designed for delivering messages over the Internet to real-time web and mobile apps with very large numbers of users (millions).

- Kafka achieves real-time messaging using a pull-based technique (long polling), while MigratoryData uses a push-based technique (WebSockets), which gives very low latency and low network utilization.

- Kafka provides persistent message caching (where messages can have TTLs of days or months), while MigratoryData provides in-memory caching (where messages can have TTLs of minutes or hours), so cached messages are delivered instantly, directly from memory, to clients that reconnect to the MigratoryData cluster after a failure.

That being said, from the architectural point of view, both systems use similar concepts to achieve messaging, high availability, guaranteed delivery, and message ordering:

| Kafka | MigratoryData |
|---|---|
| Topic (array of bytes) | Subject (UTF-8 string) |
| Value (array of bytes) | Content (UTF-8 string) |
| Offset | (Seqno, Epoch) |
| Key (optional) | |
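
To make the correspondence more concrete, here is a minimal sketch of the two message shapes side by side. It is an illustration only: the class and function names are hypothetical and not part of either product's API, and the naming scheme that builds a subject from a topic and an optional key is an assumption made for this example. Offsets and (seqno, epoch) pairs are assigned independently by each system, so the sketch simply takes them as inputs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class KafkaRecord:
    """Hypothetical, simplified view of a Kafka record."""
    topic: str
    value: bytes
    offset: int
    key: Optional[bytes] = None  # the key is optional in Kafka


@dataclass(frozen=True)
class MigratoryDataMessage:
    """Hypothetical, simplified view of a MigratoryData message."""
    subject: str
    content: str
    seqno: int
    epoch: int


def to_subject(record: KafkaRecord) -> str:
    # ASSUMPTION: subject = "/<topic>" or "/<topic>/<key>".
    # This scheme is chosen for illustration only.
    parts = [record.topic]
    if record.key is not None:
        parts.append(record.key.decode("utf-8"))
    return "/" + "/".join(parts)


def to_message(record: KafkaRecord, seqno: int, epoch: int) -> MigratoryDataMessage:
    # The byte-array value becomes UTF-8 string content.
    return MigratoryDataMessage(
        subject=to_subject(record),
        content=record.value.decode("utf-8"),
        seqno=seqno,
        epoch=epoch,
    )
```

The sketch assumes the Kafka value holds valid UTF-8; a binary payload would need an encoding step (for example Base64) before it could be carried as string content.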

Kafka Concepts

Topics

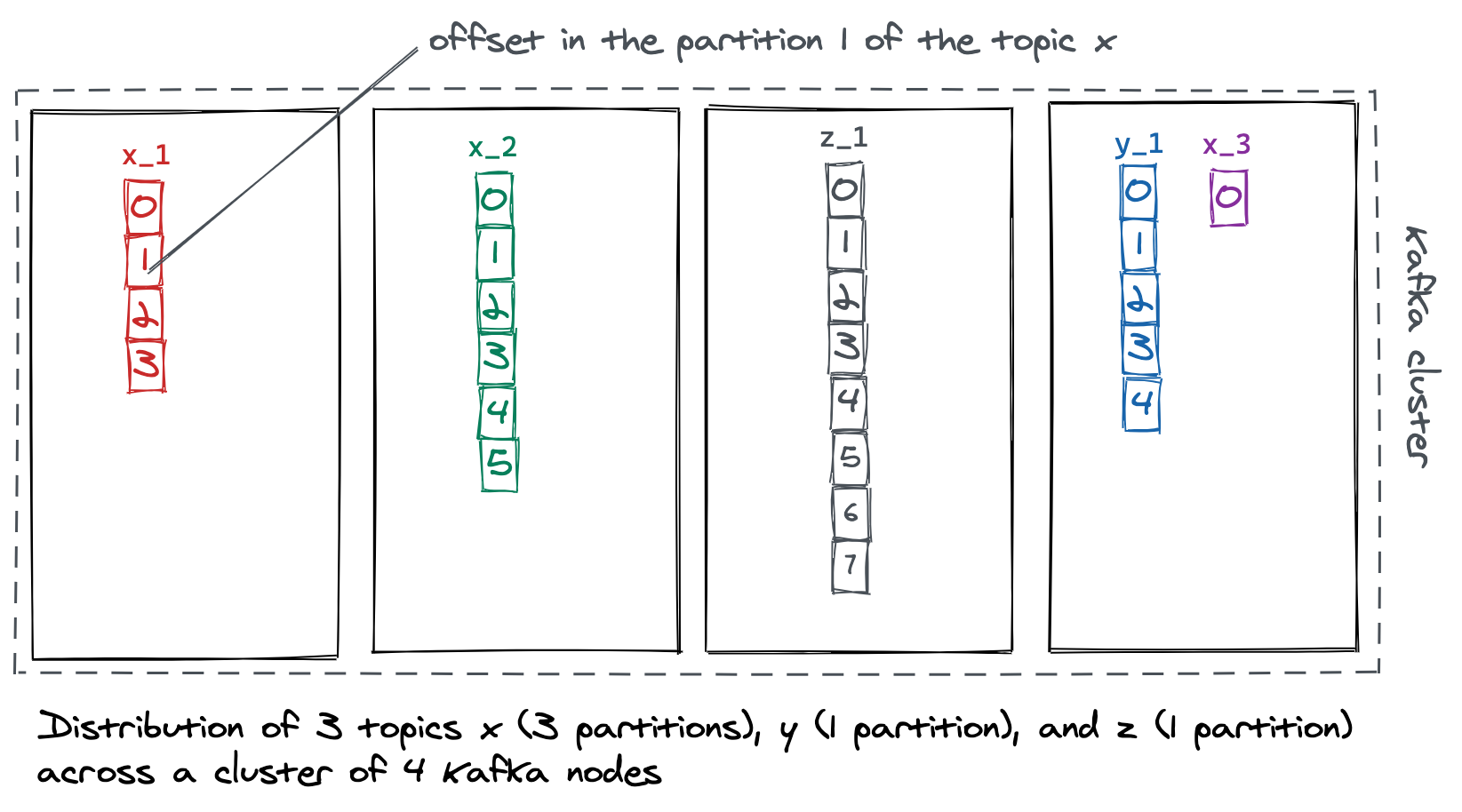

- Kafka topics are distributed across the cluster

- A topic can have multiple partitions, which are also distributed across the cluster

- A message is added to a certain partition according to the key of that message
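
Key-based partitioning can be sketched in a few lines. This is a simplified illustration, not Kafka's implementation: Kafka's default partitioner hashes the key with murmur2, while the sketch below uses CRC32 from the Python standard library as a stand-in, and `partition_for` is a hypothetical helper name.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    # Stand-in hash (CRC32). Kafka's default partitioner uses murmur2,
    # so real partition numbers will differ from the ones computed here.
    return zlib.crc32(key) % num_partitions


# Route a few keyed messages into three partitions.
partitions = {p: [] for p in range(3)}
for key, value in [(b"user-1", "a"), (b"user-2", "b"), (b"user-1", "c")]:
    partitions[partition_for(key, 3)].append(value)
```

Because the partition depends only on the key, all messages with the same key land in the same partition and keep their relative order there.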

Replication

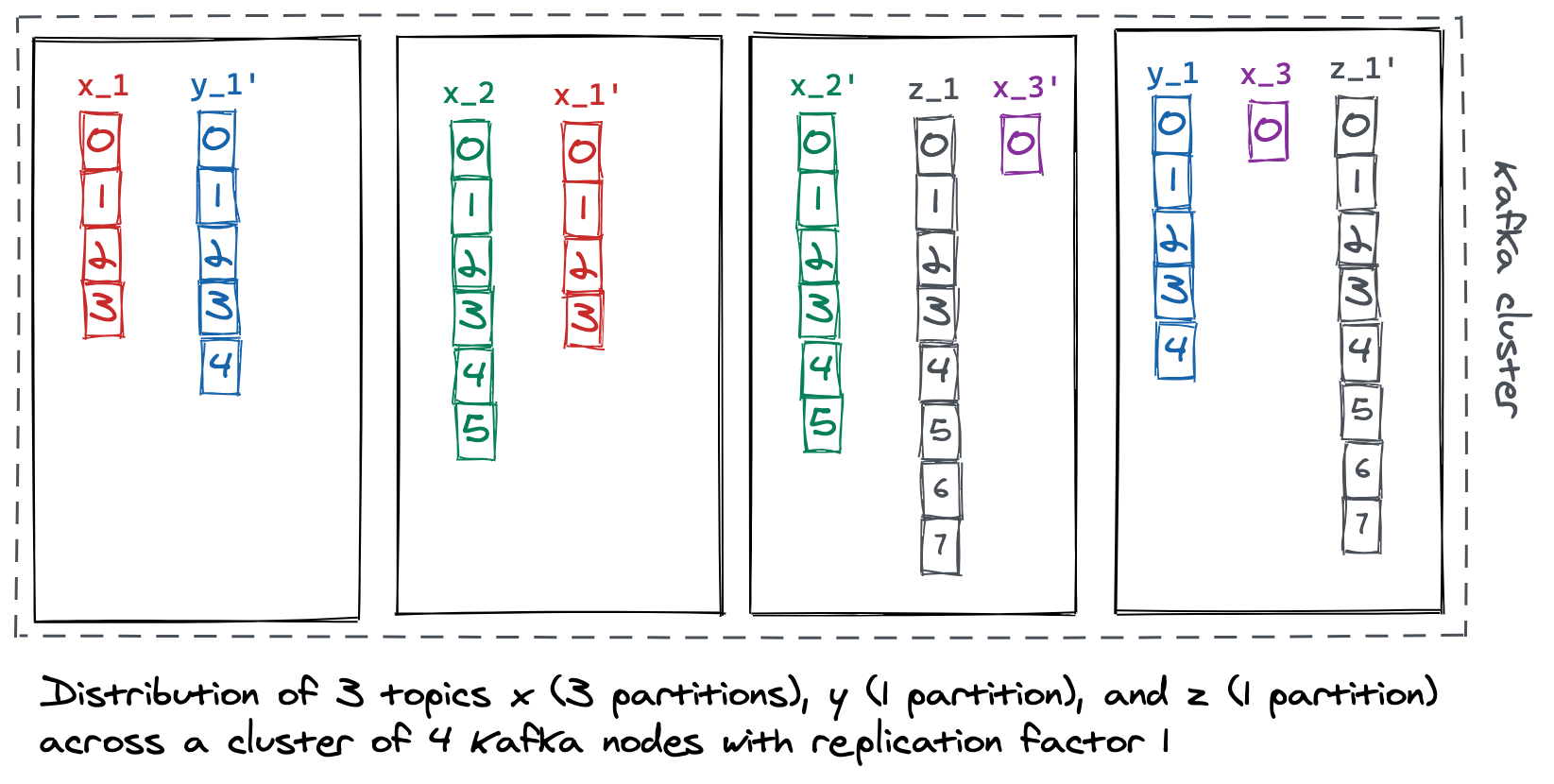

- A message written to Kafka is replicated on one or more nodes (replication factor)

- Each topic partition has replicas on other nodes, and exactly one replica is the leader

- Message replication is initiated by the leader partition which receives new messages

Ordering

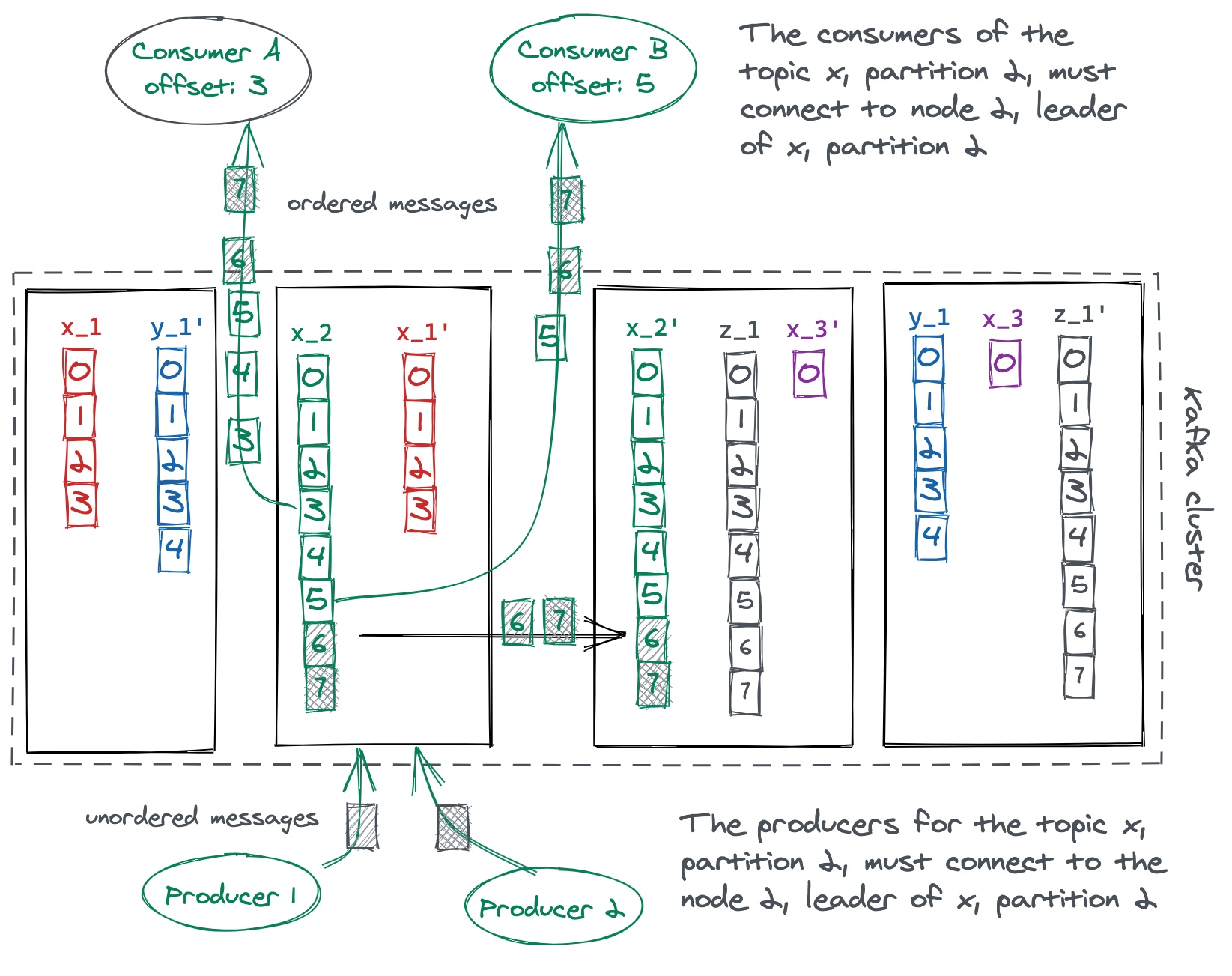

- New messages are written at the end of the leader partition of the topic

- Messages are read from a certain offset from the leader partition of the topic
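
The append-and-read-by-offset model can be illustrated with a tiny in-memory log. This is a sketch under simplifying assumptions (a single partition, no replication, no persistence); `PartitionLog` and its methods are hypothetical names, not Kafka APIs.

```python
class PartitionLog:
    """Append-only log for one partition; the offset is the list index."""

    def __init__(self):
        self._records = []

    def append(self, value: bytes) -> int:
        # New messages always go to the end; the returned offset
        # is the position of the message in the log.
        self._records.append(value)
        return len(self._records) - 1

    def read_from(self, offset: int, max_records: int = 100):
        # Return (offset, value) pairs starting at the given offset.
        if offset < 0:
            raise ValueError("offset must be non-negative")
        end = min(offset + max_records, len(self._records))
        return [(o, self._records[o]) for o in range(offset, end)]
```

A consumer that remembers the last offset it processed can resume with `read_from(last_offset + 1)` and see every later message in the order it was written.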

MigratoryData Concepts

Subjects

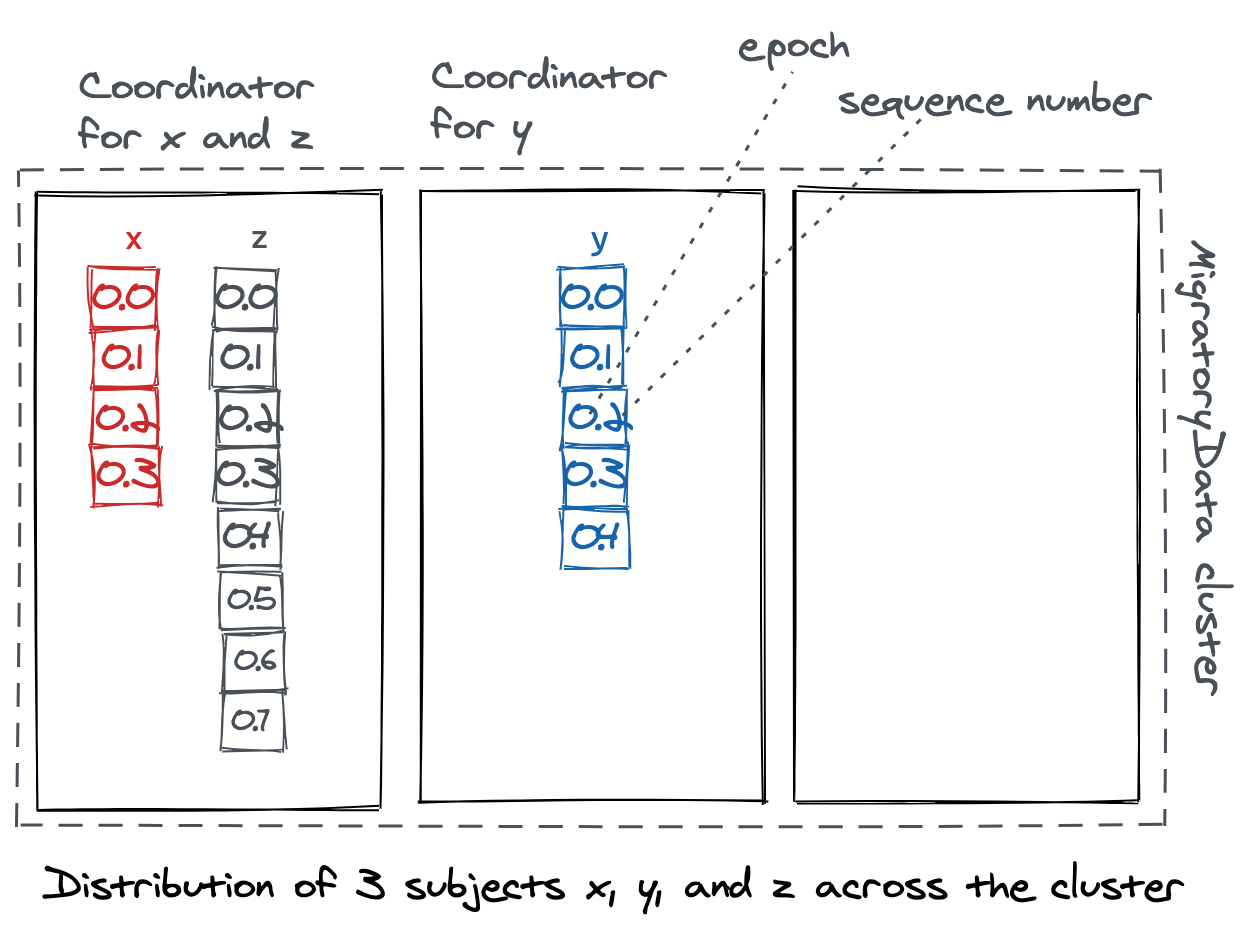

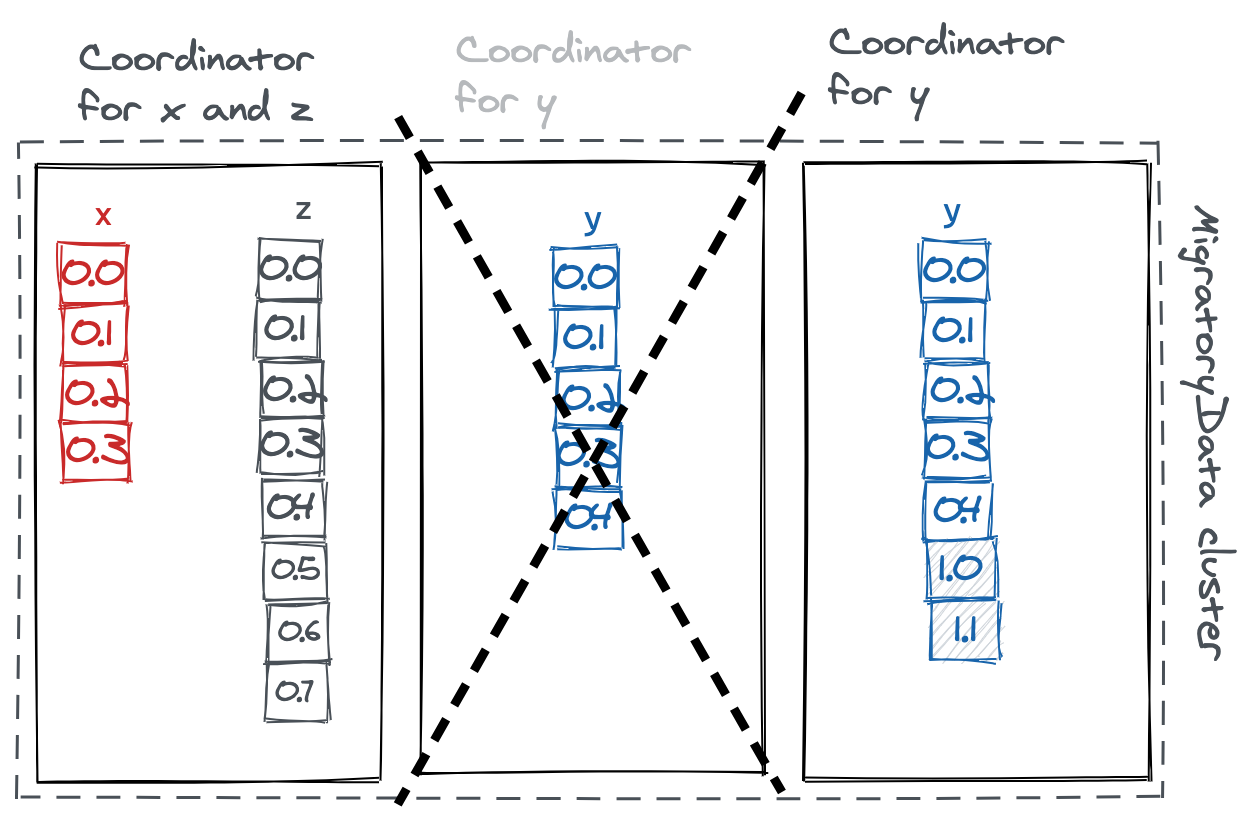

- Subjects are partitioned across the cluster

- Each node of the cluster is the coordinator (leader) for a distinct subset of subjects

Epochs

- If node 2 (coordinator of y) fails, another node is elected to coordinate y

- The epoch number is incremented, the sequence number is reset, but the total order is preserved: (0,0) < (0,1) < (0,2) < (0,3) < (0,4) < (1,0) < (1,1)

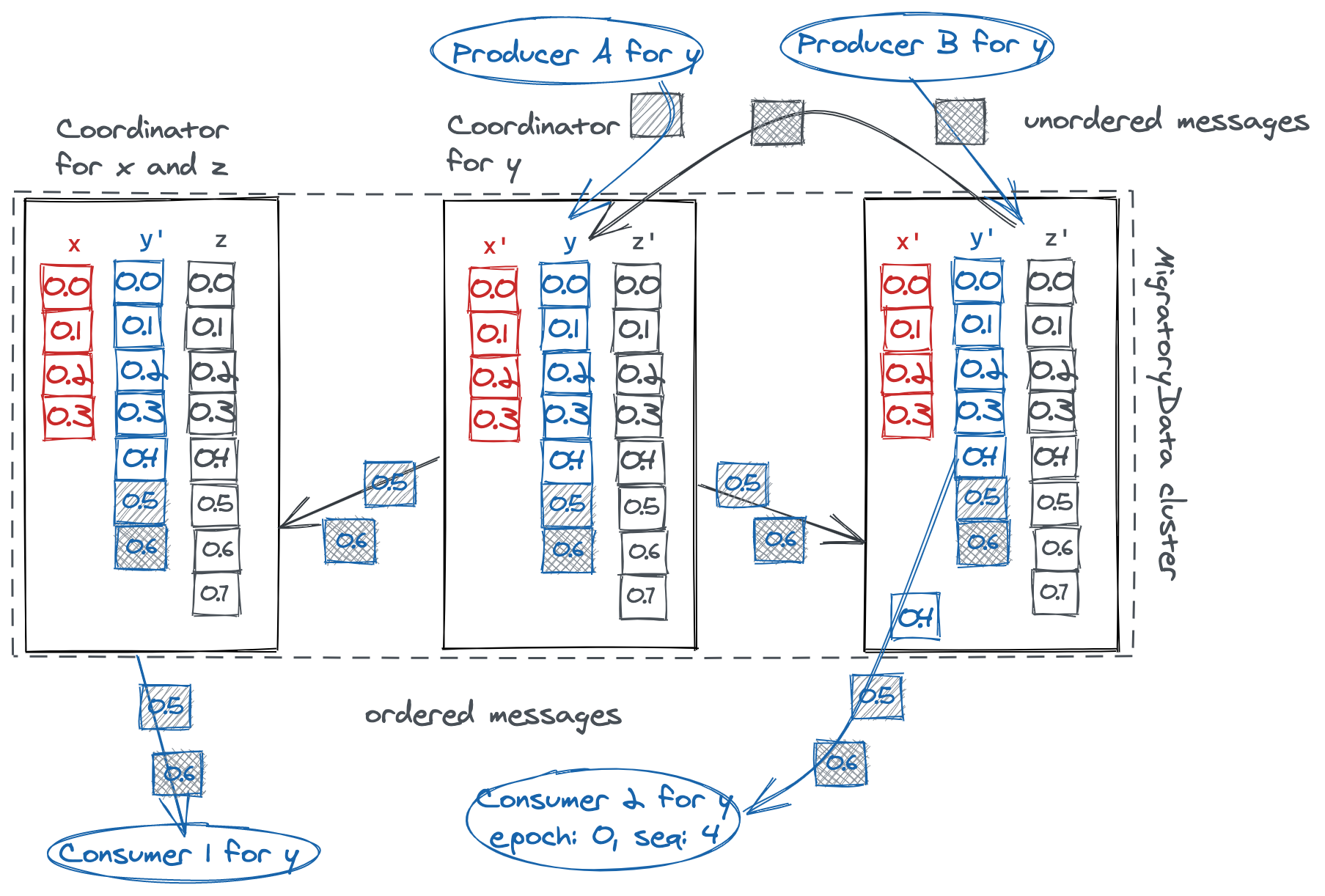
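
The ordering rule above is a lexicographic comparison on the pair (epoch, seqno). The following sketch reproduces the sequence from the example; `Position` and `SubjectSequencer` are hypothetical names used only for illustration, and the election itself is reduced to a single method call.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Position:
    # Field order matters: order=True compares epoch first, then seqno.
    epoch: int
    seqno: int


class SubjectSequencer:
    """Assigns positions to the messages of one subject."""

    def __init__(self):
        self.epoch = 0
        self.next_seqno = 0

    def next_position(self) -> Position:
        pos = Position(self.epoch, self.next_seqno)
        self.next_seqno += 1
        return pos

    def on_coordinator_failover(self) -> None:
        # A new coordinator takes over: bump the epoch, reset the seqno.
        self.epoch += 1
        self.next_seqno = 0


seq = SubjectSequencer()
positions = [seq.next_position() for _ in range(5)]   # (0,0) .. (0,4)
seq.on_coordinator_failover()
positions += [seq.next_position() for _ in range(2)]  # (1,0), (1,1)
```

Even though the sequence number restarts at zero, `Position(0, 4) < Position(1, 0)` holds, so a client can always tell which of two messages on a subject came first.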

Replication

- Every new message is replicated to the entire cluster

- Message replication is initiated by the coordinator of the subject of that message

- A message is committed after acknowledgements from two nodes (which guarantees no single point of failure)
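
The commit rule can be sketched as follows. This is a toy model, not MigratoryData's implementation: `Node`, `publish`, and `REQUIRED_ACKS` are hypothetical names, replication is synchronous, and we assume that the coordinator's own copy counts as one of the two acknowledgements.

```python
REQUIRED_ACKS = 2  # a committed message lives on at least two nodes


class Node:
    def __init__(self, name: str, alive: bool = True):
        self.name = name
        self.alive = alive
        self.cache = []  # in-memory message cache

    def replicate(self, message: str) -> bool:
        # Store the message and acknowledge, unless the node is down.
        if not self.alive:
            return False
        self.cache.append(message)
        return True


def publish(coordinator: Node, cluster: list, message: str) -> bool:
    # The coordinator stores the message first, then replicates it
    # to every other node of the cluster.
    # ASSUMPTION: the coordinator's own copy counts towards the acks.
    targets = [coordinator] + [n for n in cluster if n is not coordinator]
    acks = sum(1 for node in targets if node.replicate(message))
    return acks >= REQUIRED_ACKS
```

With three nodes and one of them down, `publish` still returns `True`, because two copies exist. With only the coordinator alive it returns `False`; a real system would also need to retry or discard the uncommitted copy, which this sketch leaves out.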

Ordering

- Producers can connect to any cluster member (messages pass through the coordinator)

- Consumers can connect to any cluster member (messages available from local cache)

Conclusion

This is the first blog post about the MigratoryData and Kafka integration. It outlined how the two messaging systems are both compatible and complementary.

We will publish more blog posts about this integration. In the meantime, read the solution page to learn more about the integration, and the documentation to learn how to use it.

If you experience any issues or have any questions or feedback, please contact us.